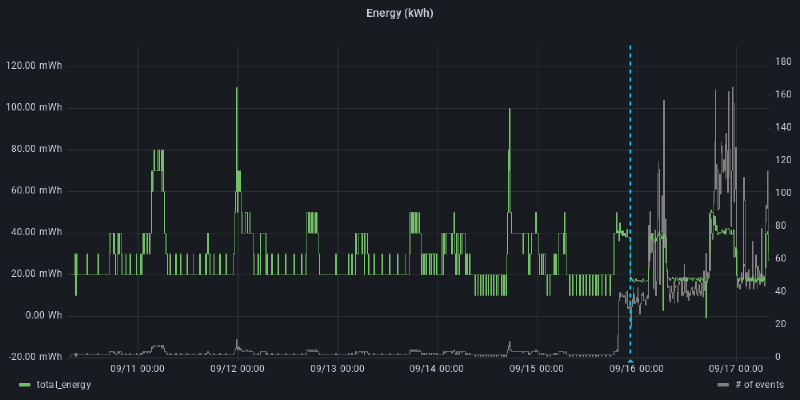

In an earlier post, I made a mistake when aggregating the energy data, which hugely inflated the aggregated energy usage. All the un-aggregated data was accurate, but the sums were wrong. Luckily, I had all the raw data stored in InfluxDB and could rebuild the sums from it.

In this post, I walk through how to rewrite the Home Assistant long-term statistics database to fix this mistake.